I. Background

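The official Omnivore service shut down on November 30, 2024, so you either need another solution or a self-hosted Omnivore. The main branch on GitHub has too many bugs to use as-is and needs some modifications.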

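Compared with other tools, Omnivore's strengths are subscription management, notes on highlights, highlighting, and automatically saved reading progress. For me it is roughly Tiny Tiny RSS and Readeck combined. A few caveats:

TTRSS has plugins for encoding conversion and full-text fetching, so some sites that work in TTRSS may not work when subscribed to through Omnivore.

Readeck can export EPUB files for offline reading; Omnivore cannot.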

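This tutorial deploys to Kubernetes (K8S). For Synology or plain Docker, see the article "马上停服?别怕,手把手教你在NAS上部署稍后阅读神器「Omnivore」!打造自己的文章知识库!" (roughly: "Shutting down soon? Don't worry: a step-by-step guide to deploying the read-it-later tool Omnivore on a NAS and building your own article knowledge base"). After deployment only some features work; the known issues are listed below.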

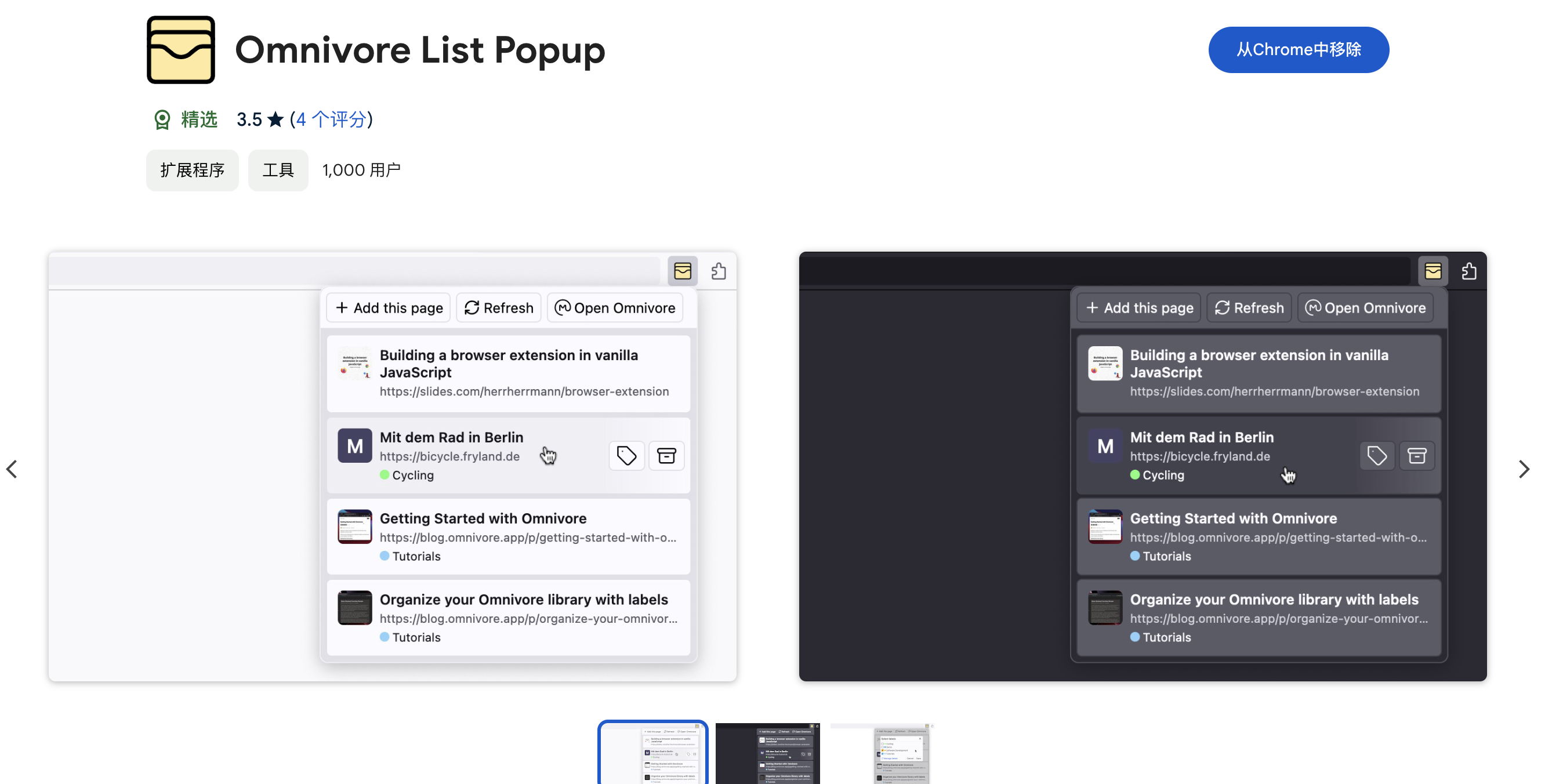

The official Chrome extension does not work; use the modified Omnivore List Popup extension to save articles instead. ✅

The Mac client works, but occasionally misbehaves. ❓

The Android and iOS clients do not work. ❌

II. Deployment Steps

2.1 Image Addresses

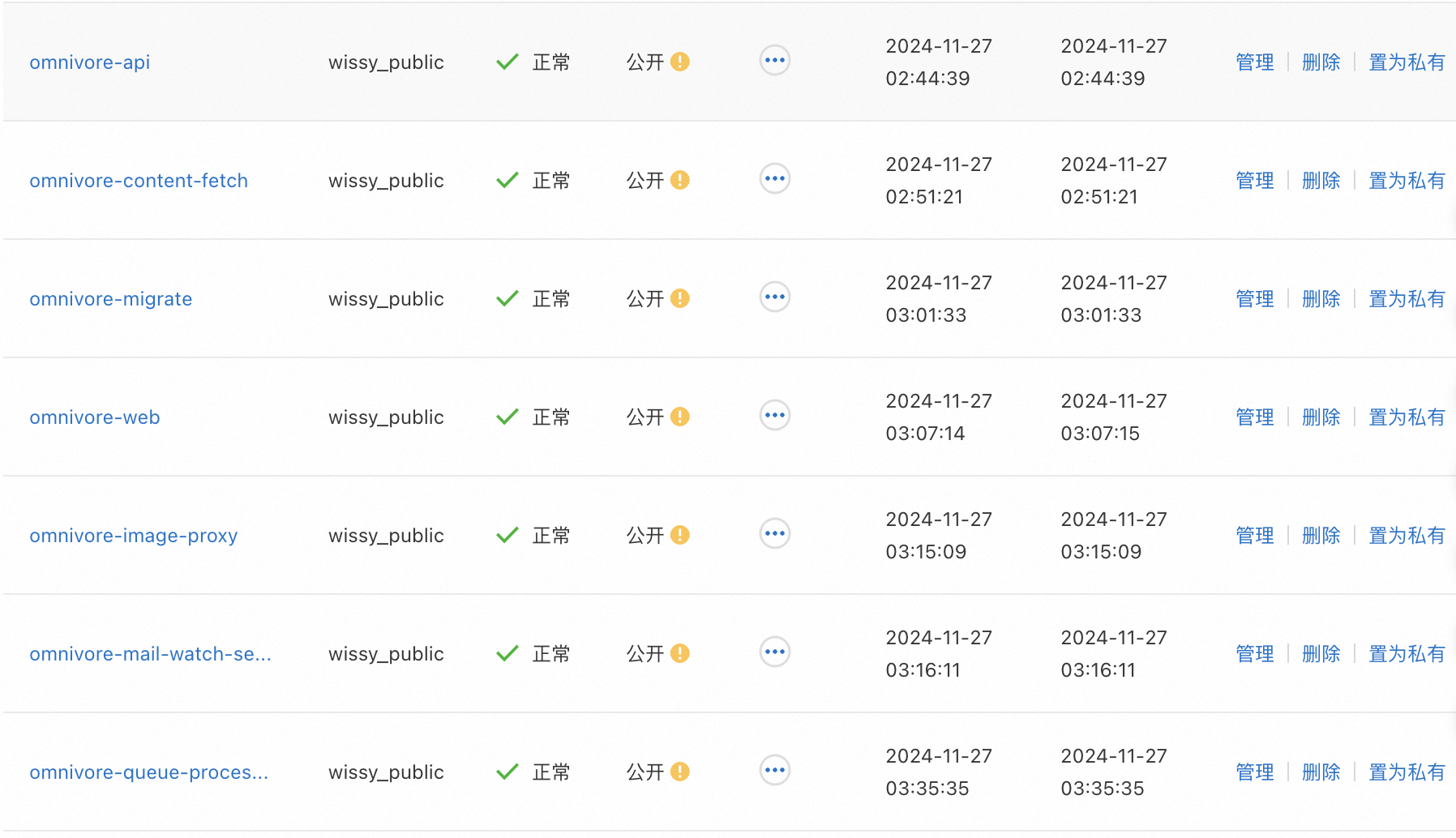

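To get the Docker images of the modified version, reply "稍后阅读" ("read later") to the WeChat Official Account "Ai日记哇". You can also use the images I uploaded to Aliyun, for example registry.cn-hangzhou.aliyuncs.com/wissy_public/omnivore-content-fetch (swap the suffix for the other services).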
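Since every image follows the same naming pattern, a short sketch can list them all. It assumes the suffixes are the component names used in the manifests in 2.3 and that every image is tagged `latest`; `image_ref` and `COMPONENTS` are hypothetical names, not part of any published tooling.

```python
# Hypothetical helper: builds image references following the pattern above.
REGISTRY = "registry.cn-hangzhou.aliyuncs.com/wissy_public"

# Component names as they appear in the image names of the 2.3 manifests.
COMPONENTS = [
    "migrate",
    "content-fetch",
    "api",
    "queue-processor",
    "mail-watch-server",
    "image-proxy",
    "web",
]


def image_ref(component: str, tag: str = "latest") -> str:
    """Return '<registry>/omnivore-<component>:<tag>'."""
    return f"{REGISTRY}/omnivore-{component}:{tag}"


if __name__ == "__main__":
    for name in COMPONENTS:
        print(image_ref(name))
```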

2.2 Deploy the Databases

Configuration files

pv.yaml (persistence)

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: omnivore-data
  namespace: ibest
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
  storageClassName: node1-nfs-client # replace with your own StorageClass
```

deployment.yaml (workload manifest)

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: omnivore-db
  namespace: ibest
  labels:
    app: omnivore-db
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: omnivore-db
  template:
    metadata:
      labels:
        app: omnivore-db
    spec:
      hostname: db
      containers:
        - name: redis
          image: redis:7.2.4
          imagePullPolicy: IfNotPresent
          ports:
            - name: "redis-port"
              containerPort: 6379
              protocol: TCP
          volumeMounts:
            - name: omnivore-data
              subPath: redis
              mountPath: /data
          resources:
            requests:
              memory: '128Mi'
              cpu: '100m'
            limits:
              memory: '4Gi'
              cpu: '1000m'
        - name: postgres
          image: ankane/pgvector:v0.5.1
          imagePullPolicy: IfNotPresent
          envFrom:
            - configMapRef:
                name: omnivore-env
          ports:
            - name: "postgres-port"
              containerPort: 5432
              protocol: TCP
          volumeMounts:
            - name: omnivore-data
              subPath: postgresql
              mountPath: /var/lib/postgresql/data
          resources:
            requests:
              memory: '128Mi'
              cpu: '100m'
            limits:
              memory: '4Gi'
              cpu: '1000m'
        - name: minio
          image: minio/minio:latest
          imagePullPolicy: IfNotPresent
          env:
            - name: MINIO_ACCESS_KEY
              value: "ACCESS_KEY" # replace
            - name: MINIO_SECRET_KEY
              value: "SECRET_KEY" # replace
            - name: AWS_S3_ENDPOINT_URL
              value: "http://omnivore-db.ibest:9000"
          args: [ "server", "/data" ]
          ports:
            - name: "minio-port"
              containerPort: 9000
              protocol: TCP
          volumeMounts:
            - name: omnivore-data
              subPath: minio
              mountPath: /data
          resources:
            requests:
              memory: '128Mi'
              cpu: '100m'
            limits:
              memory: '4Gi'
              cpu: '1000m'
      dnsPolicy: ClusterFirstWithHostNet
      volumes:
        - name: omnivore-data
          persistentVolumeClaim:
            claimName: omnivore-data
```

service.yaml (service manifest)

```yaml
apiVersion: v1
kind: Service
metadata:
  name: omnivore-db
  namespace: ibest
  labels:
    app: omnivore-db
spec:
  selector:
    app: omnivore-db
  ports:
    - name: "redis"
      protocol: TCP
      targetPort: 6379
      port: 6379
    - name: "postgres"
      protocol: TCP
      targetPort: 5432
      port: 5432
    - name: "minio"
      protocol: TCP
      targetPort: 9000
      port: 9000
```

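Apply the manifests. The postgres container reads its settings from the omnivore-env ConfigMap defined in 2.3, so apply that configmap.yaml first; otherwise the pod stays in CreateContainerConfigError until the ConfigMap exists.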

```shell
kubectl apply -f pv.yaml
kubectl apply -f deployment.yaml
kubectl apply -f service.yaml
```
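Once the pod is running, a plain TCP check against the three Service ports confirms the wiring. The sketch below is hypothetical: it assumes you run it from a pod inside the cluster so that `omnivore-db.ibest` resolves, and it only tests connectivity, not credentials.

```python
import socket

# Service DNS name and ports from the service.yaml above.
DB_HOST = "omnivore-db.ibest"
DB_PORTS = {"redis": 6379, "postgres": 5432, "minio": 9000}


def check_ports(host: str, ports: dict[str, int], timeout: float = 3.0) -> dict[str, bool]:
    """Return {name: True/False} depending on whether a TCP connect succeeds."""
    results = {}
    for name, port in ports.items():
        try:
            # create_connection resolves the host and opens a TCP connection.
            with socket.create_connection((host, port), timeout=timeout):
                results[name] = True
        except OSError:
            # Covers DNS failures, refused connections and timeouts.
            results[name] = False
    return results
```

For example, `check_ports(DB_HOST, DB_PORTS)` should report `True` for all three entries once Redis, PostgreSQL and MinIO are up.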

2.3 Deploy Omnivore

Configuration files

configmap.yaml (ConfigMap manifest)

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: omnivore-env
  namespace: ibest
data:
  # Postgres & Migrate
  POSTGRES_USER: "postgres"
  POSTGRES_PASSWORD: "postgres" # replace
  POSTGRES_DB: "omnivore"
  POSTGRES_HOST: "omnivore-db.ibest"
  PGPASSWORD: "postgres" # keep in sync with POSTGRES_PASSWORD
  PG_HOST: "omnivore-db.ibest"
  PG_PASSWORD: "app_pass" # replace
  PG_DB: "omnivore"
  PG_USER: "app_user" # replace
  PG_PORT: "5432"
  PG_POOL_MAX: "20"
  # API
  API_ENV: "local"
  IMAGE_PROXY_SECRET: "123456789" # replace
  JWT_SECRET: "123456789" # replace
  SSO_JWT_SECRET: "123456789" # replace
  GATEWAY_URL: "http://omnivore.ibest:4000/api"
  CONTENT_FETCH_URL: "http://omnivore.ibest:9090/?token=123456789" # replace the token; keep it equal to VERIFICATION_TOKEN
  GCS_USE_LOCAL_HOST: "true"
  GCS_UPLOAD_BUCKET: "omnivore"
  AUTO_VERIFY: "true"
  AWS_ACCESS_KEY_ID: "ACCESS_KEY" # replace; used by the MinIO S3 client
  AWS_SECRET_ACCESS_KEY: "SECRET_KEY" # replace
  AWS_REGION: "us-east-1"
  CONTENT_FETCH_QUEUE_ENABLED: "true"
  IMAGE_PROXY_URL: "https://omnivore_image.example.com" # replace; public image proxy address
  CLIENT_URL: "https://omnivore.example.com" # replace; public front-end address
  LOCAL_MINIO_URL: "https://omnivore_minio.example.com" # replace; public MinIO address
  # Redis
  REDIS_URL: "redis://omnivore-db.ibest:6379/0"
  MQ_REDIS_URL: "redis://omnivore-db.ibest:6379/0"
  # Mail
  WATCHER_API_KEY: "123456789" # replace
  LOCAL_EMAIL_DOMAIN: "domain.tld"
  SNS_ARN: "arn_of_sns" # only needed if you use SES and SNS for email
  # Web
  APP_ENV: "prod"
  NEXT_PUBLIC_APP_ENV: "prod"
  # BASE_URL: "http://192.168.66.5:3000" # front end
  # SERVER_BASE_URL: "http://192.168.66.5:4000" # API server
  # HIGHLIGHTS_BASE_URL: "http://192.168.66.5:3000" # front end
  # NEXT_PUBLIC_BASE_URL: "http://192.168.66.5:3000" # front end
  # NEXT_PUBLIC_SERVER_BASE_URL: "http://192.168.66.5:4000" # API server
  # NEXT_PUBLIC_HIGHLIGHTS_BASE_URL: "http://192.168.66.5:3000" # front end
  BASE_URL: "https://omnivore.example.com" # replace; front end
  SERVER_BASE_URL: "https://omnivore_api.example.com" # replace; API server
  HIGHLIGHTS_BASE_URL: "https://omnivore.example.com" # replace; front end
  NEXT_PUBLIC_BASE_URL: "https://omnivore.example.com" # replace; front end
  NEXT_PUBLIC_SERVER_BASE_URL: "https://omnivore_api.example.com" # replace; API server
  NEXT_PUBLIC_HIGHLIGHTS_BASE_URL: "https://omnivore.example.com" # replace; front end
  # Content Fetch
  VERIFICATION_TOKEN: "123456789" # replace
  REST_BACKEND_ENDPOINT: "http://omnivore.ibest:4000/api"
  SKIP_UPLOAD_ORIGINAL: "true"
  # Minio
  MINIO_ACCESS_KEY: "ACCESS_KEY" # replace
  MINIO_SECRET_KEY: "SECRET_KEY" # replace
  AWS_S3_ENDPOINT_URL: "http://omnivore-db.ibest:9000"
```

deployment.yaml (workload manifest)

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: omnivore
  namespace: ibest
  labels:
    app: omnivore
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: omnivore
  template:
    metadata:
      labels:
        app: omnivore
    spec:
      hostname: omnivore
      initContainers:
        - name: migrate
          image: registry.cn-hangzhou.aliyuncs.com/wissy_public/omnivore-migrate:latest
          imagePullPolicy: Always
          envFrom:
            - configMapRef:
                name: omnivore-env
          command: [ "/bin/sh", "-c" ]
          # Strip "tail -f /dev/null" from setup.sh so the script exits and the init container completes.
          args: [ "sed -i 's#tail -f /dev/null##g' ./packages/db/setup.sh ; ./packages/db/setup.sh" ]
        - name: createbuckets
          image: minio/mc:latest
          imagePullPolicy: Always
          env:
            - name: MINIO_ACCESS_KEY
              value: "ACCESS_KEY" # replace
            - name: MINIO_SECRET_KEY
              value: "SECRET_KEY" # replace
            - name: BUCKET_NAME
              value: "omnivore"
            - name: ENDPOINT
              value: "http://omnivore-db.ibest:9000"
            - name: AWS_S3_ENDPOINT_URL
              value: "http://omnivore-db.ibest:9000"
          command: [ "/bin/sh", "-c", "/usr/bin/mc config host add myminio ${ENDPOINT} ${MINIO_ACCESS_KEY} ${MINIO_SECRET_KEY} && /usr/bin/mc mb myminio/omnivore && /usr/bin/mc policy set public myminio/omnivore || echo 'quit'" ]
      containers:
        - name: content-fetch
          image: registry.cn-hangzhou.aliyuncs.com/wissy_public/omnivore-content-fetch:latest
          imagePullPolicy: Always
          env:
            - name: USE_FIREFOX
              value: "true"
            - name: PORT
              value: "9090"
          envFrom:
            - configMapRef:
                name: omnivore-env
          ports:
            - name: "http-port"
              containerPort: 9090
              protocol: TCP
          resources:
            requests:
              memory: '128Mi'
              cpu: '100m'
            limits:
              memory: '4Gi'
              cpu: '1000m'
        - name: api
          image: registry.cn-hangzhou.aliyuncs.com/wissy_public/omnivore-api:latest
          imagePullPolicy: Always
          env:
            - name: PORT
              value: "4000"
          envFrom:
            - configMapRef:
                name: omnivore-env
          ports:
            - name: "http-port"
              containerPort: 4000
              protocol: TCP
          resources:
            requests:
              memory: '128Mi'
              cpu: '100m'
            limits:
              memory: '4Gi'
              cpu: '1000m'
        - name: queue-processor
          image: registry.cn-hangzhou.aliyuncs.com/wissy_public/omnivore-queue-processor:latest
          imagePullPolicy: Always
          envFrom:
            - configMapRef:
                name: omnivore-env
          resources:
            requests:
              memory: '128Mi'
              cpu: '100m'
            limits:
              memory: '4Gi'
              cpu: '1000m'
        - name: mail-watch-server
          image: registry.cn-hangzhou.aliyuncs.com/wissy_public/omnivore-mail-watch-server:latest
          imagePullPolicy: Always
          env:
            - name: PORT
              value: "4398"
          envFrom:
            - configMapRef:
                name: omnivore-env
          ports:
            - name: "http-port"
              containerPort: 4398
              protocol: TCP
          resources:
            requests:
              memory: '128Mi'
              cpu: '100m'
            limits:
              memory: '4Gi'
              cpu: '1000m'
        - name: image-proxy
          image: registry.cn-hangzhou.aliyuncs.com/wissy_public/omnivore-image-proxy:latest
          imagePullPolicy: Always
          envFrom:
            - configMapRef:
                name: omnivore-env
          ports:
            - name: "http-port"
              containerPort: 8080
              protocol: TCP
          resources:
            requests:
              memory: '128Mi'
              cpu: '100m'
            limits:
              memory: '4Gi'
              cpu: '1000m'
        - name: web
          image: registry.cn-hangzhou.aliyuncs.com/wissy_public/omnivore-web:latest
          imagePullPolicy: Always
          env:
            - name: PORT
              value: "3000"
          envFrom:
            - configMapRef:
                name: omnivore-env
          # command: [ "tail", "-f", "/dev/null" ]
          args: [ "yarn", "workspace", "@omnivore/web", "start" ]
          ports:
            - name: "http-port"
              containerPort: 3000
              protocol: TCP
          # resources:
          #   requests:
          #     memory: '128Mi'
          #     cpu: '100m'
          #   limits:
          #     memory: '4Gi'
          #     cpu: '1000m'
      dnsPolicy: ClusterFirstWithHostNet
```

service.yaml (service manifest)

```yaml
apiVersion: v1
kind: Service
metadata:
  name: omnivore
  namespace: ibest
  labels:
    app: omnivore
spec:
  selector:
    app: omnivore
  ports:
    - name: "content-fetch"
      protocol: TCP
      targetPort: 9090
      port: 9090
    - name: "api"
      protocol: TCP
      targetPort: 4000
      port: 4000
    - name: "mail-watch-server"
      protocol: TCP
      targetPort: 4398
      port: 4398
    - name: "image-proxy"
      protocol: TCP
      targetPort: 8080
      port: 7070
    - name: "web"
      protocol: TCP
      targetPort: 3000
      port: 3000
```
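Several values in `omnivore-env` must agree with one another, and the `123456789` placeholders are easy to leave behind. The sketch below checks the ConfigMap's `data` section passed in as a plain dict (YAML parsing is left out). The pairs it compares are my reading of this setup, not documented Omnivore requirements: the MinIO keys are the ones the ConfigMap marks as used by the S3 client, and the `token` query parameter in `CONTENT_FETCH_URL` is assumed to be checked against `VERIFICATION_TOKEN`. `new_secret` and `check_env` are hypothetical helpers; `new_secret` just wraps Python's `secrets.token_hex`.

```python
import secrets
from urllib.parse import parse_qs, urlparse

# (key_a, key_b) pairs assumed to need identical values in this setup.
MUST_MATCH = [
    ("AWS_ACCESS_KEY_ID", "MINIO_ACCESS_KEY"),
    ("AWS_SECRET_ACCESS_KEY", "MINIO_SECRET_KEY"),
    ("PGPASSWORD", "POSTGRES_PASSWORD"),
]

# Keys whose "123456789" placeholder should be replaced with a random value.
SECRET_KEYS = ["IMAGE_PROXY_SECRET", "JWT_SECRET", "SSO_JWT_SECRET",
               "WATCHER_API_KEY", "VERIFICATION_TOKEN"]


def new_secret(nbytes: int = 32) -> str:
    """Random hex string (2 * nbytes characters) for secrets and tokens."""
    return secrets.token_hex(nbytes)


def check_env(env: dict[str, str]) -> list[str]:
    """Return human-readable problems; an empty list means nothing was found."""
    problems = []
    for a, b in MUST_MATCH:
        if env.get(a) != env.get(b):
            problems.append(f"{a} != {b}")
    # Assumption: content-fetch compares the ?token= value with VERIFICATION_TOKEN.
    query = parse_qs(urlparse(env.get("CONTENT_FETCH_URL", "")).query)
    if query.get("token", [None])[0] != env.get("VERIFICATION_TOKEN"):
        problems.append("CONTENT_FETCH_URL token != VERIFICATION_TOKEN")
    for key in SECRET_KEYS:
        if env.get(key) in (None, "", "123456789"):
            problems.append(f"{key} still uses the placeholder value")
    return problems
```

The MinIO keys also appear in the `minio` container and the `createbuckets` init container, which this check does not see; keep those in sync by hand.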

Apply the manifests

```shell
kubectl apply -f configmap.yaml
kubectl apply -f deployment.yaml
kubectl apply -f service.yaml
```

2.4 Configure the APISIX Route

```yaml
apiVersion: apisix.apache.org/v2
kind: ApisixRoute
metadata:
  namespace: ibest
  name: omnivore-route
spec:
  http:
    - name: web
      match:
        hosts:
          - omnivore.example.com # replace
        paths:
          - /*
      backends:
        - serviceName: omnivore
          servicePort: 3000
          resolveGranularity: "service"
      websocket: true
      plugins:
        - name: request-id
          enable: true
        - name: redirect
          enable: true
          config:
            http_to_https: true
        - name: real-ip
          enable: true
          config:
            source: http_x_forwarded_for
        #- name: uri-blocker
        #  enable: true
        #  config:
        #    block_rules:
        #      - "auth/email-signup"
        #      - "auth/forgot-password"
    - name: api
      match:
        hosts:
          - omnivore_api.example.com # replace
        paths:
          - /*
      backends:
        - serviceName: omnivore
          servicePort: 4000
          resolveGranularity: "service"
      websocket: true
      plugins:
        - name: request-id
          enable: true
        - name: redirect
          enable: true
          config:
            http_to_https: true
        - name: real-ip
          enable: true
          config:
            source: http_x_forwarded_for
        #- name: uri-blocker
        #  enable: true
        #  config:
        #    block_rules:
        #      - "auth/email-signup"
        #      - "auth/forgot-password"
    - name: image
      match:
        hosts:
          - omnivore_image.example.com # replace
        paths:
          - /*
      backends:
        - serviceName: omnivore
          servicePort: 7070
          resolveGranularity: "service"
      websocket: true
      plugins:
        - name: request-id
          enable: true
        - name: redirect
          enable: true
          config:
            http_to_https: true
        - name: real-ip
          enable: true
          config:
            source: http_x_forwarded_for
    - name: minio
      match:
        hosts:
          - omnivore_minio.example.com # replace
        paths:
          - /*
      backends:
        - serviceName: omnivore-db
          servicePort: 9000
          resolveGranularity: "service"
      websocket: true
      plugins:
        - name: request-id
          enable: true
        - name: redirect
          enable: true
          config:
            http_to_https: true
        - name: real-ip
          enable: true
          config:
            source: http_x_forwarded_for
```
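Every public URL in `omnivore-env` has to land on one of these routes, and a mismatched hostname is easy to miss. A minimal sketch, assuming the key-to-route mapping below, which restates the ConfigMap comments and the route backends (web on 3000, api on 4000, image on 7070, minio on 9000); `URL_KEYS` and `unrouted` are hypothetical names.

```python
from urllib.parse import urlparse

# ConfigMap key -> name of the ApisixRoute rule that should serve its host.
URL_KEYS = {
    "BASE_URL": "web",
    "NEXT_PUBLIC_BASE_URL": "web",
    "HIGHLIGHTS_BASE_URL": "web",
    "NEXT_PUBLIC_HIGHLIGHTS_BASE_URL": "web",
    "CLIENT_URL": "web",
    "SERVER_BASE_URL": "api",
    "NEXT_PUBLIC_SERVER_BASE_URL": "api",
    "IMAGE_PROXY_URL": "image",
    "LOCAL_MINIO_URL": "minio",
}


def unrouted(env: dict[str, str], route_hosts: dict[str, list[str]]) -> list[str]:
    """Return ConfigMap keys whose hostname is not listed under the expected route."""
    missing = []
    for key, route in URL_KEYS.items():
        if key not in env:
            continue  # key not set in this ConfigMap; nothing to compare
        host = urlparse(env[key]).hostname
        if host not in route_hosts.get(route, []):
            missing.append(key)
    return missing
```

Pass the hosts listed under each rule's `match.hosts`, for example `{"web": ["omnivore.example.com"], ...}`; an empty result means every public URL has a matching route.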

2.5 Modify the Omnivore List Popup Extension

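Download and install the extension from the Chrome Web Store.

On the extensions management page, find and copy the extension ID.

Copy the extension into the ~/plugins directory on your computer:

```shell
mkdir -p ~/plugins
cp -r "/Users/wissy/Library/Application Support/Google/Chrome/Default/Extensions/<extension ID>/1.9.1_0" ~/plugins/omnivore
```

Open the copy in IDEA and edit it:

Replace omnivore.app with your actual web address.

Replace api-prod.omnivore.app with your actual API address.

Load the folder as an unpacked extension (developer mode, "Load unpacked") and enable it. It only displays correctly after you configure an API key.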
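The two replacements can also be scripted. A minimal sketch, assuming the extension was copied to `~/plugins/omnivore` and using this article's placeholder hostnames (substitute your own); `patch_extension` and the list of file suffixes are assumptions. The longer `api-prod.omnivore.app` is rewritten first because it contains `omnivore.app` as a substring; in the opposite order it would become `api-prod.` followed by your web host.

```python
from pathlib import Path

# Order matters: rewrite the API host before the shorter web host it contains.
REPLACEMENTS = [
    ("api-prod.omnivore.app", "omnivore_api.example.com"),  # replace with your API host
    ("omnivore.app", "omnivore.example.com"),               # replace with your web host
]
# Only text files are touched; images and other binaries are skipped.
TEXT_SUFFIXES = {".js", ".json", ".html", ".css"}


def patch_extension(root: Path) -> list[Path]:
    """Rewrite hostnames in text files under root; return the files that changed."""
    changed = []
    for path in sorted(root.rglob("*")):
        if not path.is_file() or path.suffix not in TEXT_SUFFIXES:
            continue
        text = path.read_text(encoding="utf-8")
        new = text
        for old, repl in REPLACEMENTS:
            new = new.replace(old, repl)
        if new != text:
            path.write_text(new, encoding="utf-8")
            changed.append(path)
    return changed
```

Run `patch_extension(Path.home() / "plugins" / "omnivore")` before loading the folder. If Chrome refuses to load it because of a `_metadata` directory copied along with the Web Store files, deleting that directory usually resolves it.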

III. Notes

Once your own account is registered, uncomment the uri-blocker plugin in the routes to stop others from signing up.
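To preview what the commented-out rules would catch, here is a rough sketch. It assumes uri-blocker treats each rule as a regular expression searched anywhere in the request URI, approximated with Python's `re.search`; `is_blocked` and the sample paths are hypothetical.

```python
import re

# The block_rules from the commented-out uri-blocker plugin in the route.
BLOCK_RULES = ["auth/email-signup", "auth/forgot-password"]


def is_blocked(uri: str, rules: list[str] = BLOCK_RULES) -> bool:
    """True if any rule matches somewhere in the URI (unanchored search)."""
    return any(re.search(rule, uri) for rule in rules)
```

Because the rules are not anchored, `auth/email-signup` matches both a front-end path such as `/auth/email-signup` and the same path under `/api`, which is consistent with enabling the plugin on both the web and api routes.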

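The web container compiles the front end at startup, and 1 CPU core (a 1000m limit) is not enough to finish the build, so give it more resources or leave it unlimited. If you don't want the demo account, add `NO_DEMO: "1"` to the ConfigMap; if migrate has already run, change the demo account's status field in the database instead.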
